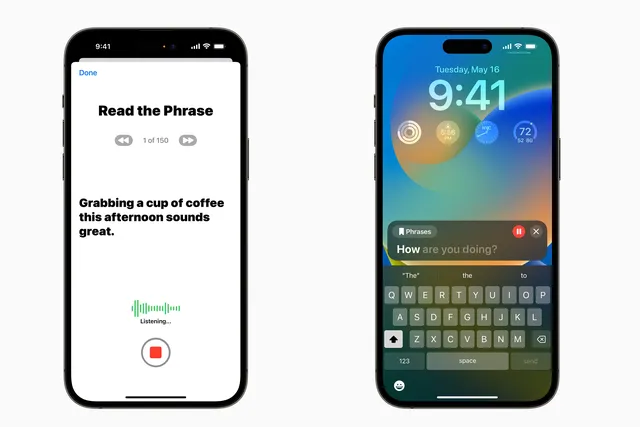

The new feature, called Personal Voice, allows users to read text prompts to record audio, enabling the technology to learn their voice. A related feature, Live Speech, will use this “synthesized voice” to read the user’s typed text aloud during phone calls, FaceTime conversations, and in-person discussions. Users can also save commonly used phrases for live conversations.

This feature is part of Apple’s effort to make its devices more accessible for people with cognitive, vision, hearing, and mobility disabilities. According to Apple, individuals with conditions that may lead to voice loss over time, such as ALS (amyotrophic lateral sclerosis), could particularly benefit from these tools.

“Accessibility is part of everything we do at Apple,” said Sarah Herrlinger, Apple’s senior director of Global Accessibility Policy and Initiatives, in a company blog post. “These groundbreaking features were designed with feedback from members of disability communities every step of the way, to support a diverse set of users and help people connect in new ways.”

Apple stated that these features will be available later this year.

While these tools have the potential to meet significant needs, they also emerge at a time when advancements in artificial intelligence have raised concerns about the misuse of convincing fake audio and video—known as “deepfakes”—to scam or misinform the public.

Apple noted in the blog post that the Personal Voice feature uses “on-device machine learning to keep users’ information private and secure.”

Other tech companies have also explored using AI to replicate voices. Last year, Amazon announced it was working on an update to its Alexa system that would allow the technology to mimic any voice, including that of a deceased family member. However, this feature has not yet been released.

In addition to the voice replication features, Apple introduced Assistive Access, which combines Phone and FaceTime into a single Calls app and offers customized versions of Messages, Camera, Photos, and Music. The interface includes high-contrast buttons, large text labels, an emoji-only keyboard option, and the ability to record video messages for users who prefer visual or audio communication.

Apple is also updating its Magnifier app for the visually impaired. The app will now feature a detection mode to help users interact more effectively with physical objects. For example, a user could hold their iPhone camera in front of a microwave and move their finger across the keypad while the app labels and announces the text on the buttons.